How to read a file in parallel

Is it possible?

The problem

The problem is as simple as this question:

Is it possible to read a file in parallel?

While it may seem really trivial, once you start thinking about the way a hard drive (or an SSD) works a lot of doubts start to rise.

In order to compare execution times we need a fake task to perform with some fake data. For simplicity, we generate a file with random unsigned integers and we want to find the minimum of them.

The proposed solutions

Reading serially

The most simple way to process a file.

- Read one line

- Perform the computation

- If you’re not at the end of the file, go to step 1

1

2

3

4

5

6

7

8

9

10

unsigned int read_serially(const char *filename) {

unsigned int best = UINT_MAX;

FILE *f = fopen(filename, "r");

unsigned int n;

while (1 == fscanf(f, "%u\n", &n)) {

best = min(best, n);

}

fclose(f);

return best;

}

Partition (logically) the file with pointers

We start with a classic parallel pattern: partition.

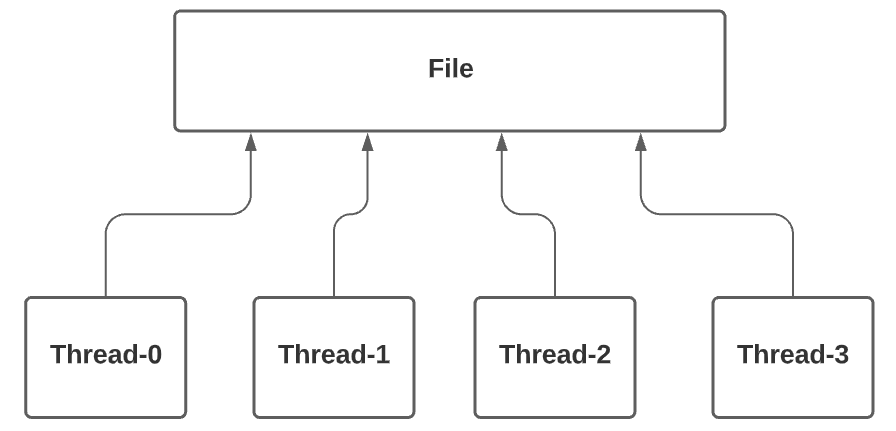

The file is partitioned like an array in the RAM. Each one of the N threads reads \(1/N\) of the file’s content. At the end, a min-reduction is performed to gather the local results.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

unsigned int partition_logically(const char *filename) {

unsigned int best = UINT_MAX;

const long unsigned int length = get_file_size(filename);

#pragma omp parallel default(none) shared(filename, length) \

reduction(min : best)

{

FILE *f = fopen(filename, "r");

const unsigned int idx = omp_get_thread_num();

const long unsigned int portion = length / omp_get_num_threads();

const long unsigned int start = portion * idx;

fseek(f, start, SEEK_SET);

unsigned int n;

for (long unsigned int i = start; i < start + portion; i++) {

fscanf(f, "%u\n", &n);

best = min(best, n);

}

fclose(f);

}

return best;

}

Read different copies at different locations

Create an exact copy of the entire file for each thread and then make that thread read only a portion of it, like if it was partitioned. At the end, a min-reduction is performed to gather the local results.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

unsigned int copy_and_partition(const char *filename) {

unsigned int best = UINT_MAX;

const unsigned int nth = omp_get_max_threads();

const long unsigned int length = get_file_size(filename);

char copies_names[nth][11];

FILE *copies[nth];

FILE *main = fopen(filename, "r");

// Creating copies

for (unsigned int i = 0; i < nth; i++) {

sprintf(copies_names[i], "tmp%02u.txt", i);

copies[i] = fopen(copies_names[i], "w");

}

// Copying content

while (!feof(main)) {

char ch = fgetc(main);

for (unsigned int i = 0; i < nth; i++) {

fputc(ch, copies[i]);

}

}

// Closing files

for (unsigned int i = 0; i < nth; i++) {

fclose(copies[i]);

}

fclose(main);

#pragma omp parallel default(none) shared(length, copies, copies_names) \

reduction(min : best)

{

const unsigned int idx = omp_get_thread_num();

copies[idx] = fopen(copies_names[idx], "r");

const long unsigned int portion = length / omp_get_num_threads();

const long unsigned int start = portion * idx;

fseek(copies[idx], start, SEEK_SET);

unsigned int n;

for (long unsigned int i = start; i < start + portion; i++) {

fscanf(copies[idx], "%u\n", &n);

best = min(best, n);

}

fclose(copies[idx]);

}

return best;

}

Physical partitions

Partition the files into physical smaller files (one for each thread) and then let each thread process the entire small file serially. This one is exactly like the “Logic Partitions” but each one is firstly dumped into a separate file. This should highlight any software-level or OS-level mutex-like behavior related to single files.

At the end, a min-reduction is performed to gather the local results.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

unsigned int split_and_read(const char *filename) {

unsigned int best = UINT_MAX;

const unsigned int nth = omp_get_max_threads();

char copies_names[nth][11];

FILE *copies[nth];

FILE *main = fopen(filename, "r");

// Creating copies

for (unsigned int i = 0; i < nth; i++) {

sprintf(copies_names[i], "tmp%02u.txt", i);

copies[i] = fopen(copies_names[i], "w");

}

// Copying content

long unsigned int line = 0;

unsigned int n;

while (1 == fscanf(main, "%u\n", &n)) {

fprintf(copies[line % nth], "%010u\n", n);

line++;

}

// Closing files

for (unsigned int i = 0; i < nth; i++) {

fclose(copies[i]);

}

fclose(main);

#pragma omp parallel default(none) shared(copies, copies_names) \

reduction(min : best)

{

const unsigned int idx = omp_get_thread_num();

copies[idx] = fopen(copies_names[idx], "r");

unsigned int n;

while (1 == fscanf(copies[idx], "%u\n", &n)) {

best = min(best, n);

}

fclose(copies[idx]);

}

return best;

}

Time comparison

The file generated to take these times contained \(10^8\) numbers.

On SSD

These results come from my PC with 8-core CPU and an SSD.

| Name | Time |

|---|---|

| Serial | 8.768 s |

| Logical partitions | 17.732 s |

| Partition copies | 177.84 s |

| Split and read | 23.872 s |

On HDD

These results come from my PC with 4-core CPU and an HDD.

| Name | Time |

|---|---|

| Serial | 14.347 s |

| Logical partitions | 42.773 s |

| Partition copies | 256.308 s |

| Split and read | 55.722 s |

The final answer

It appears from the results that the serial read is the fastest way to read a file. The logical partitions would have had better results if we didn’t count the time to create N file pointers and to move them.

The (relatively) slight time difference between “Logical partitions” and “Split and read” makes me think that, even with separate files that could be cached in memory separately, the threads still concur on some resource when reading their own file copy. I think that this shared resource might be the hard drive itself, or its controller’s software, which serves reads and writes serially with a FIFO queue. In order to confirm this assumption, I would need to test this code with different file copies placed on entirely different hard drives but, unfortunately, I only have one-drive PCs.

Lastly, we can safely say that the huge time difference between “Partition copies” and all the others is due to the process of creating N full copies of the input file.

NOTE: the source code for this experiment is available here.